Happy New Year, Groundheads™! I thought we’d start this year off by reminding ourselves of all the cool stuff big tech has promised us that is totally not empty hype, totally real, and totally just around the corner. It’s 2025 now, and we’ve seen a lot of cool tech come out, but so far we haven’t seen…

Generative AI software that makes something someone else was willing to pay money for.

“Is someone willing to spend money on this thing?” is not a perfect marker of what humans consider “valuable”. There are lots of things, from libraries to public broadcasting channels, whose value is not tied to their ability to turn a profit. But seeing what real people will plop down real dollars for can be a helpful indicator of what “consumers”[1] want. And as far as I can tell, nobody (like, nobody at all) wants to spend any hard-earned money on AI slop[2].

Yes, deceptive “AI” “written” “books” are crowding out human authors on Amazon[3], and Spotify is padding their playlists with “AI” “composed” “music”. But is this what consumers are paying Amazon and Spotify for? A lot of people and companies seem willing to pay for the ability to create slop, but is there, in economic parlance, any consumer demand for the slop itself? Not that I have seen! What has confused me for a long while is this: if nobody wants the actual stuff that “generative AI” machines generate, where is the (in economic terms) value created? Why are investors pumping so much money into companies whose only output is, well, worthless slop? Is it a bubble?

There’s an important distinction that isn’t often made: generative AI does not create valuable stuff; it creates stuff with the signals that humans typically associate with value. Here are some examples:

When we say an object is “art”, we’re using shorthand for “a work of art” (emphasis on “work”). Despite what CEOs believe, “art” describes the process (the work) of creating, not the output. The song/painting/sculpture is just a signal that the work was done. While cheap mass-produced paintings exist, nobody would really consider them “works” of art. But the existence of a painting is a pretty good signal that some human, somewhere, cared enough to dedicate some time and creative energy to it. An “AI”-generated image, no matter how aesthetically pleasing, has no value beyond the imagery itself.

To be clear, even without the effort of a creator, a pretty picture on its own has some value, because people value pretty things. But some creations derive their value purely from the effort that someone put into creating them. For example, job recruiters don’t read cover letters for the writing itself; they are looking for signals that the applicant cared enough about the job opportunity to put in the effort. A cover letter communicates to the recruiter: “I am not robo-applying to 1000 jobs; what I want is this particular job, a lot.”

In the same vein, the value of a legal demand letter is not the letter itself, but the signal that the person who sent it can afford a lawyer. An “AI”-generated demand letter has no intrinsic value. As Cory Doctorow put it recently:

The fact that an LLM can manufacture this once-expensive signal for free means that the signal's meaning will shortly change, forever. Once companies realize that this kind of letter can be generated on demand, it will cease to mean, “You are dealing with a furious, vindictive rich person.” It will come to mean, “You are dealing with someone who knows how to type ‘generate legal threat’ into a search box.”

Demand letters and cover letters remain effective indicators of effort in 2025 only because not everyone is aware of LLMs yet. In fact, there are now bots that do all of the job hunting and applying for applicants, meaning job recruiters will soon have their work cut out for them as their job (like so, so many others) increasingly becomes that of a slop-sorter. Which brings us to the real reason financial investment in these “AI” companies is so sky high, and it’s not about creating value…

Imagine that you want to cancel the long-distance phone plan you haven’t used since 1998, so you call a customer service line. After one ring, you immediately hear a perky, friendly voice happy to assist you. It could be a person (people are expensive to hire, and therefore a signal that this company values customer service) or it could be a chatbot whose purpose for existing is to maximize customer retention. There’s no way to tell. A person has feelings and thoughts of their own. A person gets tired. A person wants to retain customers (they don’t want to get fired) but they also want to make it to the end of the call without getting yelled at. But an AI has no understanding of, or empathy for, your situation. It does not tire. For every customer retained, its model is updated, homing in on exactly the phrases and vocal intonations it takes to keep future customers from cancelling. The goal is not just to rip you off, but for you to leave the call believing that in this case being ripped off is good, actually.

Generative “AI” is like the classic cup-and-balls scam. It doesn’t “generate value” for society. It is not a rational economic transaction. The “revenue” comes from someone who earned that money through human effort (the only thing humans really consider valuable). The cup-and-balls scammer “profits” not by providing a service, but by overwhelming the mark’s ability to track what’s happening. The most talented scammers can even temporarily bamboozle their marks into believing that they had fun “playing”, and therefore the “cost” was worth it.

If investors were legally allowed to invest in cup-and-balls “businesses”, they all would. It is a proven money “maker” even if it creates nothing that humans value. Most investors in “AI” companies don’t actually believe that generative “AI” is creating economic value either. They understand that the fancy chatbots and short-form video generators are the misdirections of a skillful scam artist. What “AI” investors believe is that generative AI will soon outperform the weak, empathetic human salesman at squeezing “consumers” for their money. Investors believe that software that can efficiently and mechanically overwhelm consumers’ ability to make rational economic decisions will make them rich. They’re probably right. And y’know, speaking of getting rich, it’s 2025 and we still haven’t seen…

A cryptocurrency that has utility beyond its function as a speculative asset.

A “speculative asset” is an investment purchased in the belief that its value (there’s that word again) will increase significantly in the short term. Despite more than a decade-point-five of crypto-boosters promising that Bitcoin (or Ethereum or HawkTuahcoin…) will one day be useful (or at least more useful than money), so far every cryptocurrency has turned out to be a new twist on a “greater fool” (Ponzi) scheme.

But, similarly to how we looked at “AI” slop in part one, if you want to get a feel for the value of something, take a step back and look at what goes into crypto (burned-up dead dinosaurs) and what comes out (a ledger). It’s money, but instead of being issued by a government, it’s issued by bros on Reddit and TikTok and hyped by your second-most obnoxious family member at Thanksgiving. And with extra, more energy-consuming, steps!

I concede that crypto seems to work well for laundering money or buying illegal things over the internet. So it’s not entirely without utility. I’m not even trying to suggest that those use cases are bad, just that they don’t collectively represent a multi-trillion-dollar industry. Crypto’s function as a money-anonymizer is secondary to its utility as a speculative asset. And speaking of things that we were told would be multi-trillion-dollar industries by now, can you believe it’s 2025 and we still don’t live in…

The Metaverse

That’s right! I bet you almost forgot about The Metaverse! Honestly, picking on the Metaverse in 2025 feels unfair. I bet very few people, even people who work at Meta, still believe “The Metaverse” is going to be a thing, let alone The Next Big Thing™. But I feel it’s helpful to bring up “The Metaverse” as a reminder that big tech companies these days just aren’t in the business of manufacturing products people want. What they manufacture is FOMO, which they then apply to whatever high-margin nonsense they can.

I’ve talked about economic “demand” a few times in this post. Now is a good time to bring up a good quote from a good essay by a salesman (which I recently shared on Flipboard) that eloquently highlights how artificial the concept of “demand” can be:

[Economists] think of demand as a vast natural force to be harnessed, like wind or oil. Sounds nice, but things look a little less elegant to the salesmen in the trenches. They know: Demand is more like blood, and it has to be mercilessly extracted, drop by drop, by an army of sweaty little goblins who don’t eat unless they hit their quotas. Suddenly, the economy looks more like an infinite series of tiny frauds than a harmonious ecosystem.

Remember, ~~Facebook~~ Meta is an advertising company.

I’m still, years later, not clear on what “The Metaverse” was even supposed to be. A Facebook VR video game? Something to do with crypto? I distinctly remember being told that I could soon buy “digital clothing” with bitcoins and “wear” it in every video game. The Metaverse felt nebulous and phony in 2021, and in hindsight, well, that’s exactly what it was.

I do think it’s funny how Facebook tried to sell The Metaverse so hard that they changed their name to “Meta”. Let the name serve as a lasting monument to how much faith you should put into the promises of big tech. Especially when those promises use words like “open” or “decentralized”, because it’s 2025 and there’s still no sign of…

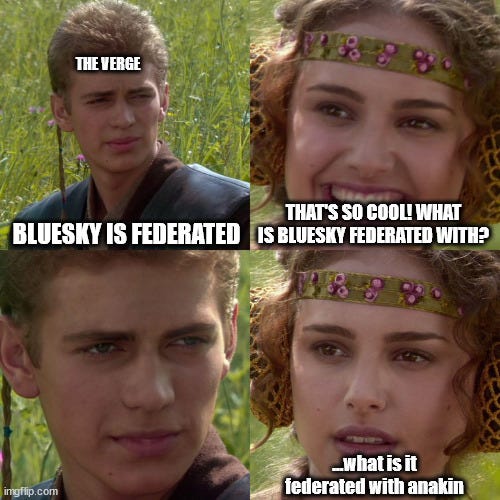

BlueSky federating with anything

If you’re not familiar, BlueSky is the latest for-profit Twitter clone, and (importantly) it seems to actually be catching on among the coveted demographic of Gen-Xers and Aging Millennials Addicted to Twitter But Who Also Feel Bad About It.

The thing that makes BlueSky different from Twitter is that they use the words “open”, “federated”, and “decentralized” in their press releases. BlueSky is (surprise) none of these things. They are intentionally muddying the waters around the definitions of those words, and yet journalists (good ones! Whom I respect!) continue to parrot these claims of “openness” without investigating. At the risk of sounding dramatic, I genuinely suspect this factual gap is a form of denial and willful ignorance emerging collectively from journalists desperate to get back the same high they used to get from Twitter without the guilt of supporting 𝕏 with their presence.

If you are curious why a decentralized social web matters, check out the “Why Should I Care?” section of my simple guide to Mastodon. But what’s important here is that whatever you feel “decentralized” means, BlueSky is not it. It is a for-profit, centralized social media company just like Twitter or Facebook. And sooner or later, all of the journalists building a following there are going to find themselves trapped all over again when the investors come to collect their due and the ol’ “engagement” knob gets cranked up to the “fighting equals profit” setting.

I actually don’t hate BlueSky. It represents an incremental improvement over 𝕏/Twitter. And I do understand the desire journalists have to recapture the halcyon days when it felt like the Internet had a “town square” (it didn’t, really) and they got to feel important by having large followings and rubbing digital elbows with celebrities and politicians.

If BlueSky is truly decentralized, as they claim to be (if it is not one thing owned and operated by a single company), it would be very easy to prove by example with the existence of a second BlueSky, the same way there are thousands of “Mastodons” all working in conjunction. And yet, it’s 2025 and we still don’t have…

A compelling reason to purchase the Apple Vision Pro

Putting VR goggles on to play video games is a fun gimmick! Strapping VR goggles onto your face to do spreadsheets is an idea only a corporate executive would love. Not even Apple’s storied marketing department seems capable of explaining why someone would want to pay $3,500 to do spreadsheets in 3-D (wait, is that the metaverse??). Perhaps in the future Apple will invent an AI salesman capable of convincing anyone to buy anything. But right now it’s 2025 and we’ve yet to see…

An actual self-driving car

OK, so I admittedly can’t know for sure if this one is true or not. Companies like Tesla have totally, blatantly lied in the past on this topic. But you have to admit: isn’t it weird that if self-driving cars have been totally real since 2016, they can only be experienced as taxis and not actually, y’know, purchased? The reason, I suspect, is that if someone were allowed to purchase their own “self-driving” car, they could theoretically drive it somewhere without Internet access, quickly exposing the deception that those taxis are not AI-driven at all, but operated by remote human workers. In fact, according to the New York Times, at least one “driverless” car company needed more than one person per car to replace a single human driver:

Half of Cruise’s 400 cars were in San Francisco when the driverless operations were stopped. Those vehicles were supported by a vast operations staff, with 1.5 workers per vehicle. The workers intervened to assist the company’s vehicles every 2.5 to five miles.

(Emphasis mine)

The reason these companies would rather pay for one-point-five human drivers per car than the usual one is that the “AI” companies realized that creating a real AI driver is a much more expensive enterprise than simply putting some cameras on an Internet-connected vehicle and outsourcing the labor of driving to places where labor is cheap.

Stuart McMillen wrote on the topic of human-at-a-distance “AI” back in 2019, using the spicy analogy that Thomas Jefferson didn’t install dumbwaiters to replace his slaves, but to hide them. Most of the time when you see “AI-powered” automation, from “self-driving” cars to “automated” checkouts, the work is not actually done by machines but by hidden-away human beings in developing nations who are paid very little. I’ve read that there is a joke among these workers that “AI” really stands for “absent Indian”. Maybe next year the AI will work as promised, and robo-taxis won’t exploit people. On the plus side, it’s 2025 and we don’t have…

Limited options anymore.

Something I noticed more in 2024 than in any other year is that open, community-created software, apps and platforms are not only “more accessible than ever”, but just regular accessible. Signal is better than SMS texting while also being private, and easy enough that your grandparents will be able to use it. Linux on the desktop has finally achieved (in my opinion) a better “just works” experience for most people than Windows or Mac, and Steam’s upcoming Linux-based OS could easily become the default option for gaming. Mastodon and Pixelfed provide a social web experience without ads, nefarious algorithms or venture capital investors eager to enshittify. Self-hosting cloud apps is so much easier than it was even in 2023, requiring just a few clicks in most cases. You can self-host apps to replace iCloud, Google Photos, Audible and streaming video. And there are totally new things too, like an app to mute and auto-skip YouTube commercials on your TV! One day I will probably write about the world of self-hosting, but frankly it is all advancing so fast it’s hard for me to keep up.

The point, however, is that having a cloud service for free doesn’t have to mean “ad-supported” anymore. And while a lot of these apps aren’t as polished as the offerings from big tech, many have recently crossed the line into “perfectly cromulent” territory thanks to armies of volunteers collaborating openly and dedicating their spare time.

We’ve had options, but now we have good options. Wherever big tech is trying to squeeze you for pennies or attention, look to the open source community. Some programmer somewhere in the world was probably as fed up as you are and created a free (often better!) version of the thing. I have a hope that we’ll look back at 2025 as a banner year for advancements in alternatives to the big tech monopolies.

I believe this nation should commit itself to achieving the goal, before this decade is out, of moving our brains away from giant for-profit sanity-munching machines and onto quieter, community run software that works for us, not the other way around.

Not because it is easy, but because I am tired.

[1] “Consumer, what a loser way to think of oneself.” - Cory Doctorow (paraphrased)

[2] I actually did encounter an exception to this! Apparently people are paying others to create nonconsensual deepfake porn using AI. Consider me debunked!

[3] If you want to know how much Amazon cares about this problem, they are “combating” AI slop by limiting ebook uploads to 3 per day. A totally normal pace for human writers.

Good to have you back, Justin!