“What do you own, the world? How do you own disorder?” - Serj Tankian

What does it mean to say a platform is “toxic”? Most people seem to feel it to some degree, and few will say that they walk away from social media feeling better than before. Something unwanted is spreading from our devices into our brains, but what exactly makes something “toxic” as opposed to just being regular ol’ unpleasant?

It doesn’t feel correct to describe toxicity as simply “anything that can make someone feel bad”. News organizations often cover gruesome and depressing topics, but news (well, real news, at least) is not called “toxic”. On the other hand, a lot of social media sites are functionally “news” feeds, and those are often described as toxic, so what gives?

There is no one definition, but when it comes to mental health and setting boundaries, it’s helpful to assign clear labels so that we can better recognize them as they come up. Hopefully that means that when you finally put your phone down and get the icky feeling that even a shower won’t make clean, you can recognize not only what made you feel bad, but more importantly- how.

What we talk about when we talk about toxicity

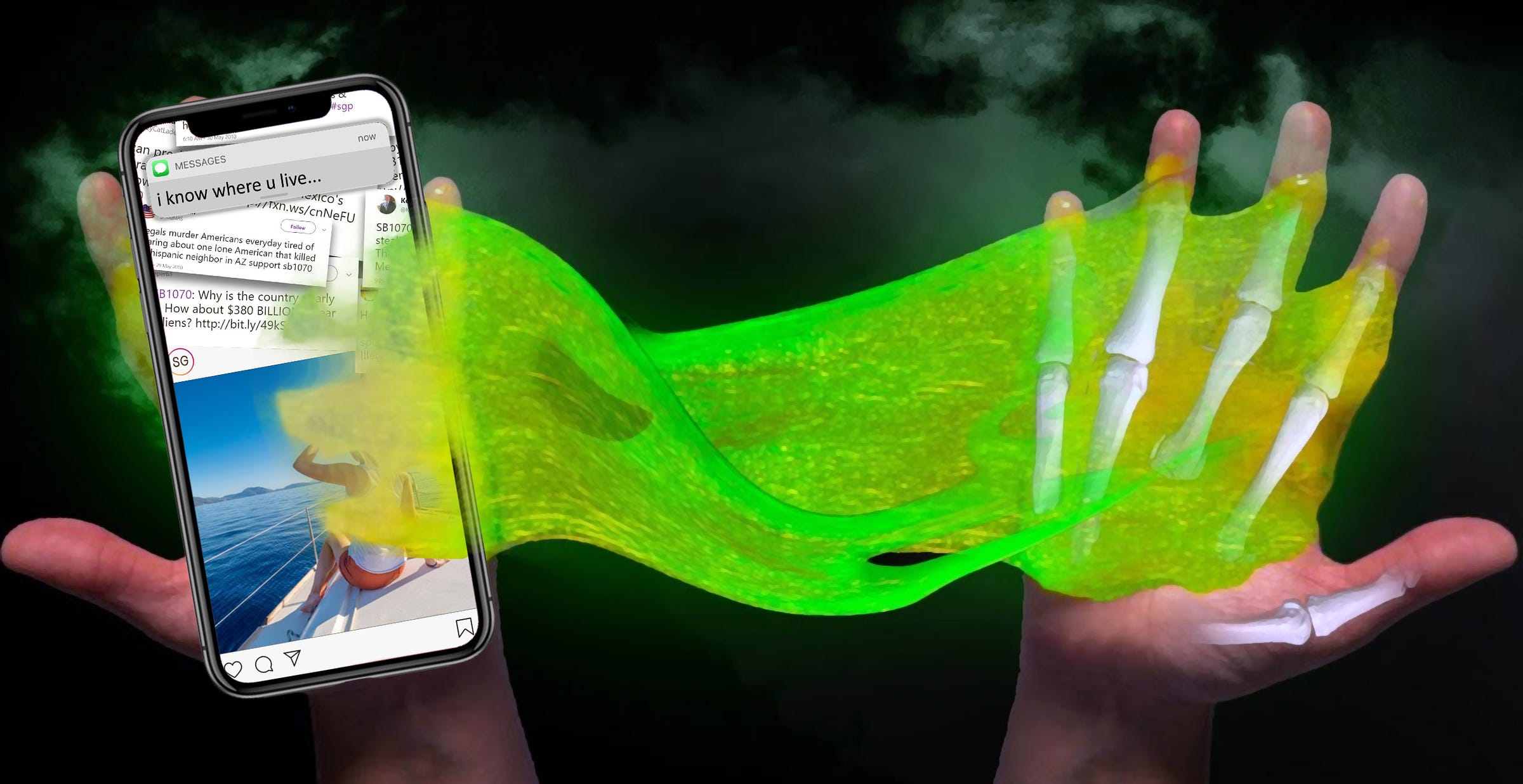

Visualize a green ooze with the consistency of maple syrup, that sticks to everything it can. The more you try to wipe it off, the more it gets all over. In small amounts it can be cleaned up in a short period of time, but frequent (or significant) exposure makes cleanup take longer.

Let’s define “toxic” to mean that after interacting with something, a part of it kinda sticks to you and continues to affect you in a negative way. And if you interact with it frequently enough, it’ll kinda soak in, at which point you become toxic too, and capable of spreading it further.

In the context of the internet, “toxicity” is when something gets inside of your head. It’s when the thoughts keep cycling for hours or days after you’ve put the phone down. When you’re not even scrolling anymore but might as well be.

Most content is not inherently toxic (more on that in a bit). Often it’s the context in which it’s presented (by profit-maximizing people or profit-maximizing algorithms) that makes some types of content “stickier” than others.

So with that definition in mind- let’s examine the types of toxicity found online and the sorts of content usually associated with each.

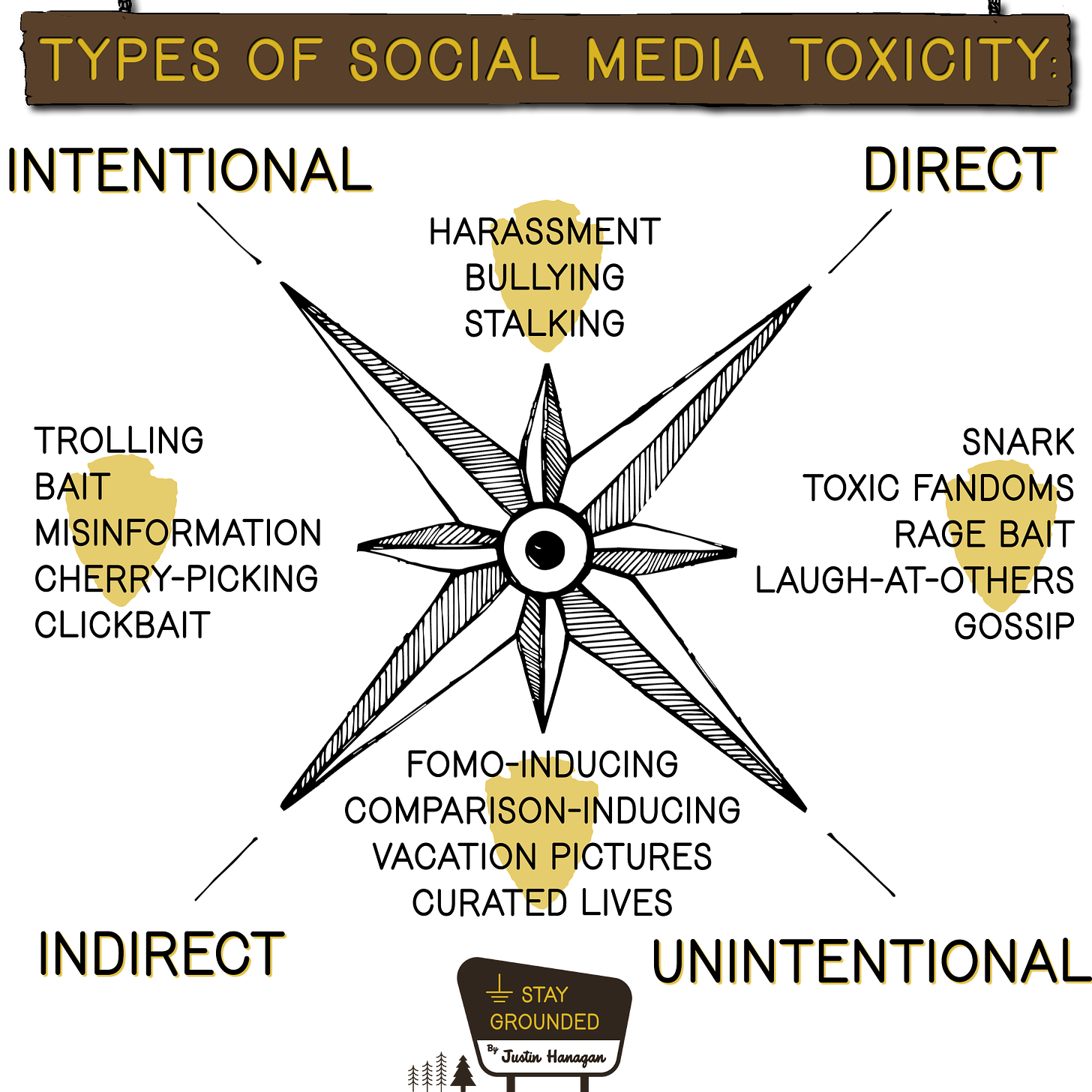

The four flavors of toxicity:

Intentional & Targeted - “Bullies”

The most obvious, and inherently toxic kind. When both the poster and the consumer know that one party is trying to elicit a negative emotion in another. Examples include:

Harassment

Bullying

Stalking

Pretty clear cut. Most platforms have a problem with individual harassment and bullying but most (to their credit) also have clear rules against it. Enforcement of harassment rules is another story, but at least most of the major platforms do articulate that it’s unwanted.

Intentional & Indirect - “Trolls”

Louis CK (Yeah, I know but hear me out) has an old bit about how he hates when people say “the n-word”, that it’s worse than saying the real word, “because now you’re making me think it!”. The joke is that when someone leads us to connect the dots instead of just saying the thing outright, it can feel like we’re the one introducing negativity into the conversation. Of course in reality it was the other person, but it is not always obvious in the moment, because the thought feels like it came from our own mind.

Intentionally toxic indirect content occurs when only one person (the “poster”) knows that they are trying to elicit a reaction, often (but not always) by casting a wide net and waiting for responses. Examples:

Trolling

Bait

Sharing “triggering” content for reactions

Mis/disinformation meant to divide people or spread an ideology

Sensationalist clickbait or cherry-picked headlines.

In this category, one party is not being fully transparent about their attempts to influence the other. Indirect but intentionally toxic content often nudges users to “connect the dots”. To make them “think the n-word.”

Unintentional & Direct - “Rage candy”

Rage candy is what I call content that’s not intended to make the consumer feel bad, but is directly consumed by someone who “knows” what they’re getting into and willingly seeks it out. Think: the “laugh at others” stuff. The “Can you believe this idiot?” stuff. The “bad-but-feels-kinda-good-in-the-short-term” stuff.

“Snark” communities.

“Call out” accounts like LibsofTikTok

/r/idiotsincars, /r/trashy, /r/publicfreakout…

“Rage bait” communities

Many aspects of fandoms

A funny thing about emotions is that if you act on them, you feel them more. Acting on anger does not make the anger go away; it makes it stronger. Same goes for empathy. Regularly indulging in content that “others” other people (no matter how deserving of scorn they may be) will not make you more empathetic and understanding. Empathy begets empathy. Gratitude begets gratitude. Anger begets anger.

Content in this category directly invites users to look down on another person or group of people. It encourages elitism, leaning into negative emotions and closing one’s mind off to empathy. What makes consuming rage candy different from, say, sympathizing with a friend who’s venting some steam is that on the internet, the steam never ends. There’s always more content to perpetuate it. Anger (again, even when wholly justified) can become addictive, and when it does, it becomes self-sustaining.[1]

Unintentional & Indirect - “FOMO cues”

A FOMO cue is content that was not posted or consumed with the intention of making anyone feel bad, yet is still likely to induce a spiral of unwanted emotions.

An earnest post from your friend’s vacation that you subconsciously compare yourself to

The new car belonging to your old college roommate (who now makes twice your salary).

Other people living their “best lives”

Content that nudges women into developing eating disorders.

The stuff that makes people feel “not good enough”.

It’s the indirect/unintentional variety that demonstrates that context, not just content, is part of what makes interaction with a platform toxic. And if I may put on my tinfoil hat: I suspect this category is what social media companies might try to algorithmically maximize, because feelings of anxiety and inadequacy push people to buy more of what they see in ads[2]. All toxic content drives engagement, but unintentional/indirect content’s harmless appearance (both the poster and consumer want to increase positivity) provides plausible deniability when it comes to knowledge of any secondary harm inflicted.

Are the people operating these platforms deliberately aiming to hurt their users for profit? Well, it’s probably not their primary goal. It’s more likely that employees are simply programming their algorithms to “maximize ad clicks”, and letting the algorithms do their thing while the humans in charge look the other way. We do know for certain that Facebook (as one example) was aware of (and mostly ignored) some of the harm it caused. It’s likely that other platforms are aware too, so it doesn’t feel right to let them off the hook.

These four types of toxicity exist offline too, obviously. Humans can be mean and manipulative to one another, or knowingly indulge in self-harming behaviors without the internet. But social (increasingly, recommendation) media platforms aim to make themselves addictive, which means that users continue to seek them out, and become regularly exposed.

It’s like how an occasional evening around a campfire is unlikely to induce lung cancer, but small amounts of daily smoke inhalation will sneak up on you.

It stands to reason that (to a degree) toxicity is enticing to users, which likely explains why a large, popular “nontoxic” social media platform does not exist[3]. It just wouldn't be enticing enough to hook enough users fast enough to become self-sustaining.[4]

When we lean into an emotion, we feel it more. These platforms strongly encourage users to lean in (for “engagement”), and aside from direct harassment, it’s impossible to identify toxic content with absolute certainty. You can’t always know for sure if the other person is trying to make you feel bad, or if their content just happened to hit you at the wrong time. But you can know that it was targeted in a way to maximize your emotional reaction.

[1] It’s very common for a regular consumer of “rage candy” to begin to produce like-minded content, and/or directly target (i.e., bully/harass) the subject of scorn. Indeed, much of the same content in the “unintentional/direct” category would be considered “direct and intentional” if the intended content consumer was the subject of rage, as opposed to the community.

[2] https://www.ncbi.nlm.nih.gov/pmc/articles/PMC8199559/

[3] I’ve actually made the case before that Wikipedia is in essence a reddit-style social media platform, albeit one with clearer site-wide guidelines and enough humans on staff to moderate the moderators. It’s my belief that Wikipedia’s existence is strong evidence against the oft-repeated argument that social media platforms are “too big to moderate”, or that rampant toxicity is an inevitable result of anonymous free speech. This is not to imply that Wikipedia is flawless by any stretch, only that its success demonstrates that many of the pitfalls of social media are solvable (though likely not as profitably) if those in charge choose to address them.

[4] It’s possible to imagine an algorithm so intelligent and so perfectly able to match tastes that it could serve up just the addictive parts of social media without the “carcinogens”. In the future will we see a “vape pen” form of social media? Is that much better?

"when people say “the n-word”, that it’s worse than saying the real word" This is exactly how I feel - but I've also seen the circuitous ways in which offensive language evolves. We've seen circles drawn around the negativity of the word "cripple" and its variants. We've seen "queer" move from meaning "unusual in an odd manner" to "nonconforming to a cisgender, heterosexual 'norm' of human identity and behavior", and some urban Americans of sub-Saharan African descent taking that "n" word and making it their own by writing its ending "-a" instead of "-er".

What you write of as "rage culture" has a name: schadenfreude. It means "getting enjoyment (happiness) from someone else's misfortune".